In French offices, a silent revolution is underway. According to a 2023 IFOP-Talan study, 68% of French employees using artificial intelligence tools do not report it to their management. More alarmingly, nearly 50% of employees turn to unapproved AI tools to accomplish their tasks, according to the 2025 Zendesk report. This phenomenon, dubbed “Shadow AI,” today represents one of the most underestimated threats to organizational cybersecurity.

For executives, CIOs, and CISOs, understanding and controlling Shadow AI is becoming a top priority. The financial stakes run to hundreds of thousands of euros: according to the 2025 IBM report, Shadow AI adds an average of 321,900 euros to the cost of a data breach in France. Beyond cost, the phenomenon raises critical issues of regulatory cybersecurity compliance, cybersecurity governance, and AI ethics. This article explains how to identify, understand, and control this emerging threat.

Understanding Shadow AI: Anatomy of a Massive Phenomenon

Definition and Scope of Shadow AI

Shadow AI refers to the use of artificial intelligence tools (chatbots, code or content generators, external cloud solutions) by employees without validation from the Information Systems Department or integration into security processes. In other words, it is AI operating outside the company’s official policies.

This phenomenon is a continuation of traditional Shadow IT but has characteristics that make it particularly concerning. Unlike classic unauthorized applications, generative AI tools directly process sensitive content: strategic reports, source code, customer data, confidential information.

In certain particularly exposed sectors such as financial services, Shadow AI use has surged by 250% in one year. Nor is this use occasional: 93% of the agents who use these tools do so regularly, creating a worrying operational dependency.

The Motivations Behind Uncontrolled Adoption

Understanding why employees turn to Shadow AI makes it possible to grasp the phenomenon better and to respond appropriately.

• The quest for efficiency: Employees seek to improve their productivity in the face of growing workloads.

• Slow internal solutions: As Michel Cazenave, CISO at PwC, explains, “Shadow IT is the name we give to the inventiveness of users in finding solutions when we don’t provide them with any.”

• The accessibility of tools: Many AI tools are available online for free or via personal subscriptions. A few clicks are enough to access powerful capabilities, without waiting for an approval process that can take weeks or months.

• Lack of awareness of risks: Many employees are unaware of the security and regulatory implications of their usage. They perceive these tools as simple assistants.

Understanding these motivations is essential to building a response that goes beyond prohibition and offers viable alternatives.

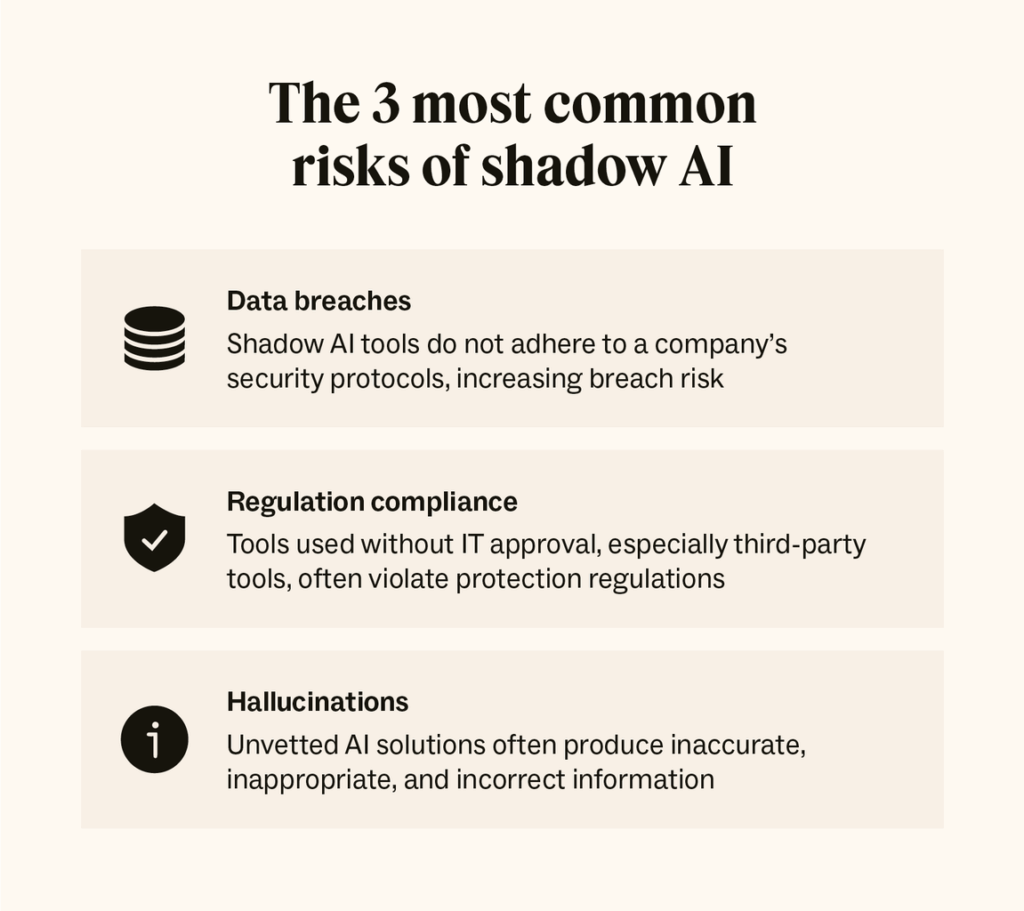

The Multidimensional Risks of Shadow AI

Exposure of Sensitive Data and Information Leaks

The primary and most critical risk concerns data security. When employees use external AI tools to process internal information, this data can be stored on third-party servers, often located outside of Europe, without guarantees of confidentiality or deletion.

Real-world cases illustrate the severity of these risks. Samsung had to ban the use of a widely known generative AI service after employees inadvertently disclosed confidential information, including proprietary source code and strategic data.

According to the 2025 IBM Cost of a Data Breach Report, organizations with high levels of Shadow AI faced breach costs $670,000 higher than those with little or none. One in five organizations studied (20%) also reported a breach involving Shadow AI.

Cybersecurity audits often reveal considerable blind spots: security teams cannot protect what they cannot see. Shadow AI multiplies these gray areas, making cybersecurity maturity assessments partial and misleading.

Regulatory Non-Compliance and Legal Risks

The unregulated use of AI directly exposes the company to risks of non-compliance with current regulations, with major financial and reputational consequences.

• GDPR Violations: Processing personal data via unapproved tools can constitute a serious GDPR violation. Fines can reach 20 million euros or 4% of total worldwide annual turnover, whichever is higher.

• NIS2 Non-Compliance: The NIS2 (Network and Information Security 2) directive requires essential and important entities to strictly control their digital supply chain and data processing. Shadow AI creates undocumented and unsecured dependencies that are incompatible with these requirements.

• AI Act Obligations: The AI literacy (training) obligation in Article 4 of the AI Act has applied since February 2025. According to the European Commission’s FAQ of May 2025, an adequate training program must cover general AI knowledge, the company’s role, the specific risks involved, and use cases. Shadow AI falls entirely outside this framework.

• Regulated Sectors: Highly regulated sectors such as healthcare, finance, and legal services are particularly vulnerable. In these areas, the use of Shadow AI has increased by over 200% in one year.
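Because the GDPR ceiling for the most serious infringements (Article 83(5)) is the higher of the two amounts, it grows with company size. Written as a formula, with T the undertaking’s total worldwide annual turnover for the preceding financial year (the two turnover figures below are purely illustrative):

```latex
\begin{aligned}
F_{\max} &= \max\bigl(20\,000\,000\ \text{EUR},\; 0.04\,T\bigr) \\
T = 10^{9}\ \text{EUR} &\;\Rightarrow\; F_{\max} = \max\bigl(2\times10^{7},\ 4\times10^{7}\bigr) = 4\times10^{7}\ \text{EUR} \\
T = 2\times10^{8}\ \text{EUR} &\;\Rightarrow\; F_{\max} = \max\bigl(2\times10^{7},\ 8\times10^{6}\bigr) = 2\times10^{7}\ \text{EUR}
\end{aligned}
```

This is only an upper bound: supervisory authorities set the actual amount case by case, using the criteria listed in Article 83(2), and a lower tier (10 million euros or 2%) applies to other infringements.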

Beyond direct financial sanctions, 13% of IT leaders report having suffered financial, customer, or reputational damage following incidents related to Shadow AI. The reputational impact can be devastating and lasting.

Technical Vulnerabilities and Cyberattacks

Shadow AI significantly expands the company’s attack surface. Each unvalidated tool is a potential gateway for cybercriminals, who are adapting their techniques to this new reality. Possible consequences include:

• Compromised source code: code generated or “improved” by an unvetted AI tool and pasted into a project can introduce flaws that backfire on the developer.

• Prompt injection and exfiltration: LLMs deployed without oversight can be targeted by prompt injection attacks designed to extract sensitive information.

• Phishing and social engineering: Generative AI has become a social engineering engine for cybercriminals.

Strategies for Detecting and Controlling Shadow AI

• Identify Unauthorized Usage in Your Organization (Network flow analysis, workstation audits, anonymous surveys and questionnaires, production analysis, in-depth IT security review).

• Build Robust AI Governance (Define a clear usage policy, establish an AI governance committee, implement rapid validation processes of under 15 days, document and track, integrate into internal security control).

• Offer Secure, High-Performing Alternatives (Deploy an internal sovereign AI, propose a supervised AI platform, develop specialized business assistants, simplify access to validated tools…).

The cybersecurity maturity assessment must now explicitly include the Shadow AI dimension, with specific indicators of visibility and control. Likewise, the cybersecurity action plan must treat the deployment of these alternatives as a strategic priority, with clear resources and deadlines.
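To make the network flow analysis listed above and a simple visibility indicator more concrete, here is a minimal Python sketch. It assumes proxy logs exported as whitespace-separated lines of the form `timestamp user domain`. The domain lists use placeholder `.example` domains, and the names `AI_DOMAINS`, `APPROVED_AI_DOMAINS`, `analyze_proxy_log`, and `visibility_ratio` are hypothetical; a real deployment would rely on the organization’s own proxy, firewall, or CASB tooling and a maintained list of AI services.

```python
from collections import Counter
from dataclasses import dataclass, field

# Hypothetical watch list of external generative AI services (placeholder
# domains). A real list would come from a maintained proxy or CASB feed.
AI_DOMAINS = {
    "genai-chat.example",
    "codegen.example",
    "slides-ai.example",
    "internal-ai.corp.example",
}

# Hypothetical registry of AI tools validated by the governance committee.
APPROVED_AI_DOMAINS = {"internal-ai.corp.example"}


def matches(domain: str, watched: set[str]) -> bool:
    """True if domain equals a watched domain or is one of its subdomains."""
    return any(domain == d or domain.endswith("." + d) for d in watched)


@dataclass
class ShadowAIReport:
    total_ai_requests: int = 0
    unapproved_by_domain: Counter = field(default_factory=Counter)
    users_with_unapproved_use: set[str] = field(default_factory=set)

    @property
    def visibility_ratio(self) -> float:
        """Share of AI requests that went to approved tools (1.0 = all approved)."""
        if self.total_ai_requests == 0:
            return 1.0
        unapproved = sum(self.unapproved_by_domain.values())
        return (self.total_ai_requests - unapproved) / self.total_ai_requests


def analyze_proxy_log(lines) -> ShadowAIReport:
    """Classify AI traffic in log lines assumed to be 'timestamp user domain'."""
    report = ShadowAIReport()
    for line in lines:
        parts = line.split()
        if len(parts) < 3:
            continue  # skip malformed or empty lines
        _, user, domain = parts[:3]
        domain = domain.lower()
        if not matches(domain, AI_DOMAINS):
            continue  # not traffic to a known AI service
        report.total_ai_requests += 1
        if not matches(domain, APPROVED_AI_DOMAINS):
            report.unapproved_by_domain[domain] += 1
            report.users_with_unapproved_use.add(user)
    return report


if __name__ == "__main__":
    sample_log = [
        "2025-03-01T09:12:00 alice genai-chat.example",
        "2025-03-01T09:15:00 bob internal-ai.corp.example",
        "2025-03-01T09:20:00 alice codegen.example",
        "2025-03-01T09:21:00 carol intranet.corp.example",
    ]
    report = analyze_proxy_log(sample_log)
    print("AI requests:", report.total_ai_requests)
    print("Unapproved by domain:", dict(report.unapproved_by_domain))
    print("Users to contact:", sorted(report.users_with_unapproved_use))
    print(f"Visibility ratio: {report.visibility_ratio:.2f}")
```

The ratio counts requests rather than data volume, and it cannot see traffic that bypasses the corporate proxy (personal devices, mobile networks), so it is best read as one indicator among several, alongside the surveys and workstation audits mentioned above. The list of users is meant to open a conversation, in line with the no-sanction reporting culture, not to trigger disciplinary action.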

• Train, Raise Awareness, and Support Change (General AI training, risk awareness, authorized tool training).

The AI Act’s training obligation reinforces the need for these programs, turning a good practice into a regulatory requirement.

• Create a Culture of Responsibility and Transparency (Clear top-down communication, encourage reporting without fear of sanctions, recognize innovation, ethical accountability, shared feedback).

Beyond technical training, develop an organizational culture where everyone feels responsible and free to express their needs and practices.

Conclusion: Transforming a Threat into a Strategic Opportunity

Shadow AI today represents one of the most underestimated threats to organizational cybersecurity. With 68% of French AI users not reporting their usage, nearly 50% of employees resorting to unapproved tools, and additional costs of over 320,000 euros per data breach, the scale and impact of the phenomenon can no longer be ignored.

For executives, CIOs, and CISOs, the response cannot be limited to prohibition. Shadow AI reveals a mismatch between employees’ operational needs and the solutions proposed by the organization. Building effective cybersecurity governance requires recognizing this reality and providing pragmatic answers.

Controlling Shadow AI is part of a broader approach to responsible digital transformation that combines good IT governance practices, regulatory cybersecurity compliance (GDPR, NIS2, AI Act), and AI ethics principles. Organizations that succeed in this transformation will turn a threat into an opportunity.

DYNATRUST has integrated its own sovereign AI into its platform; it does not rely on any existing AI model. This solution guarantees total control of your data, native GDPR compliance, and complete technological independence.

Is your organization exposed to Shadow AI without knowing it? Our cybersecurity governance experts support you in assessing your cybersecurity maturity. Contact us to carry out a comprehensive corporate IT audit and develop a cybersecurity action plan transforming this threat into a competitive advantage.

Benefit from our expertise in regulatory cybersecurity compliance and AI ethics to accelerate your responsible digital transformation and strengthen your leadership position in your market.